Controlling AI is easier than you think

Artificial intelligence is on the verge of delivering enormous benefits to society. But, as many have warned, it may also bring threats never seen before. As with technology in general, the same tools that will advance scientific discovery could be used to create cyber, chemical, or biological weapons. Societies will need to share AI's benefits widely while keeping the most powerful AI out of the hands of bad actors. The good news is that there is already a template for how to do that.

In the 20th century, nations built international institutions that allowed the peaceful spread of nuclear energy while slowing the spread of nuclear weapons, by controlling access to the key materials that underpin them: highly enriched uranium and weapons-grade plutonium. The risk is managed through international agreements and bodies such as the Nuclear Nonproliferation Treaty and the International Atomic Energy Agency. Today, 32 countries operate nuclear power plants, which together provide 10% of the world's electricity, yet only nine countries have nuclear weapons.

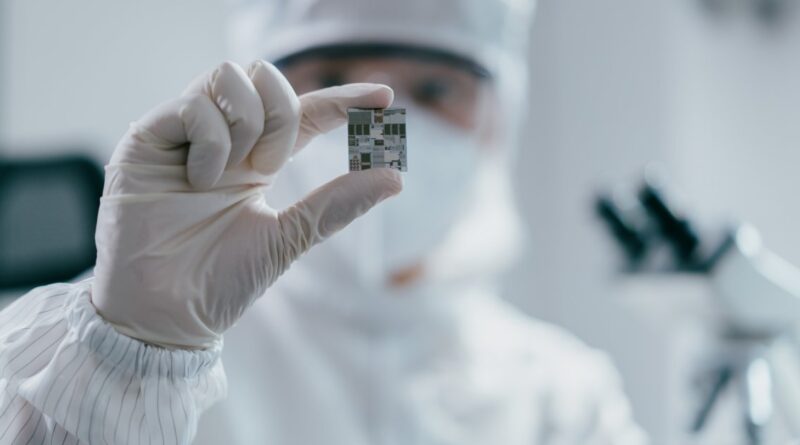

Countries can do the same for AI today. They can govern AI at its foundation by controlling access to the specialized chips needed to train the world's most advanced AI models. Business leaders and even the Secretary-General of the United Nations, António Guterres, have called for an international regulatory framework for AI similar to the one for nuclear technology.

The most advanced AI systems are trained on tens of thousands of highly specialized computer chips. These chips are housed in large data centers, where they process data for months to train the most capable AI models. The chips are difficult to produce, their supply chain is tightly controlled, and large numbers of them are needed to train a single frontier model.

Governments could create a regulatory system in which only authorized compute providers can install the most advanced chips in their data centers, and only licensed, trusted AI companies can access the computing power needed to train the most capable, and most dangerous, AI models.

This may seem like a tall order. But only a few nations are needed to impose such a regime. The specialized chips used to train advanced AI models are made almost exclusively in Taiwan, and their production relies on critical technology from three countries: Japan, the Netherlands, and the US. In some cases, a single company controls a key part of the chip production chain. The Dutch company ASML is the only manufacturer of the extreme ultraviolet lithography machines used to make the most advanced chips.


Governments are already taking steps to regulate these high-tech chips. The US, Japan, and the Netherlands have imposed export controls on their chip-making equipment, restricting its sale to China. The US government has also banned sales to China of the most advanced chips made with US technology. It has proposed requirements for cloud computing providers to identify their foreign customers and to report when a foreign customer trains a large AI model that could be used for cyberattacks. And it has begun debating, but has not yet imposed, restrictions on the most powerful trained AI models and how widely they can be shared. While some of these restrictions stem from geopolitical competition with China, the same tools can be used to govern chips so that rival nations, terrorists, or criminals cannot use the most powerful AI systems.

The US can work with other nations to build on this foundation and create a framework for governing computing resources across an AI model's entire life cycle: chip-making equipment, the chips themselves, data centers, the training of AI models, and the trained models that result from this production cycle.

Japan, the Netherlands, and the US could help spearhead a global regulatory framework under which these highly specialized chips are sold only to countries that have established regulations governing computing hardware. This would include tracking the chips, identifying who is using them, and ensuring that AI training and deployment are safe.

But global governance of computing resources can do more than keep AI out of the hands of bad actors: it can empower innovators around the world by bridging the divide between the compute haves and have-nots. Because the computing requirements for training advanced AI models are so high, the industry is drifting toward an oligopoly. That kind of concentration is not good for society or for business.

Some AI companies have responded by releasing their models openly. This is good for innovation and helps smaller players compete with Big Tech on a more level playing field. But once an AI model is released openly, anyone can modify it, and its safety guardrails can be quickly stripped away.

Fortunately, the US government has begun exploring a national cloud computing resource as a public good for academics, small businesses, and startups. Powerful AI models could be hosted on this national cloud, letting trusted researchers and companies use them without releasing the models online to everyone, where they could be abused.

Countries could even come together to build an international resource for global scientific cooperation on AI. Today, 23 countries participate in CERN, the international physics laboratory that operates the world's most advanced particle accelerators. Nations should do the same for AI, creating a global computing infrastructure where scientists can collaborate on AI safety, empowering researchers around the world.

The potential of AI is enormous. But to unlock its benefits, society will also have to manage its risks. By controlling the physical inputs to AI, nations can manage it safely and build the foundation for a secure and prosperous future. It's easier than most people think.
